Google I/O '25 Keynote

Google I/O '25: Ushering in the Era of Intelligent, Agentic, and Personalized AI

Google I/O '25 marked a pivotal moment in the advancement of artificial intelligence, showcasing how years of foundational research are now rapidly transforming into tangible, intelligent, and deeply personalized experiences for users and developers alike. The keynote illuminated Google's accelerated pace of innovation, emphasizing the delivery of powerful AI capabilities that understand context, anticipate needs, and act autonomously to assist in diverse aspects of daily life.

This year's event unveiled a robust ecosystem of AI-powered tools and platforms, from next-generation large language models and enhanced developer frameworks to a complete overhaul of Google Search and a groundbreaking vision for Extended Reality (XR). The overarching message was clear: Google is committed to making AI a ubiquitous, indispensable, and seamless part of how we interact with technology and the world around us.

The Grand Overture: A Masterpiece of AI-Powered Creativity

The Google I/O '25 keynote began with a breathtaking demonstration of generative AI's creative prowess, immediately immersing the audience in the capabilities of Google's advanced models.

The opening sequence commenced with a simple text prompt: "Text to Video". What followed was the instant generation of a high-quality video segment depicting the "number 10 space station," a clear testament to the power of Google's Imagen and Veo AI models.

This segued into a creative countdown sequence from 10 to 1, where each number was vividly brought to life in unique and dynamic ways: from a jet stream forming the number nine, skydivers creating an eight, to a motorcycle in sand sketching a five. This diverse and often fantastical imagery underscored the versatility and imaginative scope of the video generation technology.

The countdown culminated in a transformation from realistic to fantastical. An initial, seemingly gritty old Western town quickly morphed into a vibrant, surreal parade. This jubilant procession was teeming with a diverse array of animals (elephants, giraffes, zebras, capybaras, and even a pixelated T-Rex) interspersed with whimsical objects like giant chocolate bars, lollipops, and disco balls. This visual storytelling conveyed themes of imagination, boundary-breaking, and delightful surprises.

The entire sequence was a powerful visual narrative of excitement and wonder, shifting from an empty landscape to a chaotic, colorful celebration. Crucially, on-screen text "Generated with Imagen and Veo" explicitly attributed this visual creativity to Google's AI tools, reinforcing the company's branding. The grand finale, spelling out "WELCOME TO I/O" in celebratory balloons, left no doubt that this was an opening for a major Google event, showcasing its AI advancements with high-quality visuals and dynamic camera work.

The Gemini Era: Unprecedented AI Innovation at Scale

The core message of Google I/O '25 resonated around the "Gemini Era", signifying a new phase where Google's AI advancements, particularly with its Gemini family of models, are not just cutting-edge research but rapidly deployed, intelligent, and highly personalized experiences.

Relentless AI Innovation and Shipping

Sundar Pichai underscored the accelerated pace of innovation, revealing that Google has released over a dozen new models and research breakthroughs, alongside more than 20 major AI products and features, since the last I/O. This rapid deployment signifies a shift where intelligent models can be shipped "on a random Tuesday," rather than exclusively at major events, indicating a fundamental change in development and release cycles.

Unprecedented Model Progress: Gemini Takes the Lead

Gemini 2.5 Pro emerged as a dominant force, sweeping the LMArena leaderboard with the #1 position across all categories. LMArena is a community-driven benchmark platform that evaluates and compares large language models (LLMs) through head-to-head battles judged by human preference. This top ranking is a strong indicator of Gemini 2.5 Pro's capabilities across diverse tasks.

Gemini's Elo scores tell the same story. The Elo rating system, originally devised for chess, has been adapted to measure the relative skill of LLMs, with higher scores indicating better performance. Gemini Pro models have gained more than 300 Elo points since their first generation. In coding, Gemini 2.5 Pro took the #1 spot on WebDev Arena, surpassing its previous version by 142 Elo points, and is noted as the fastest-growing model on coding platforms like Cursor. Gemini models also hold the top three spots for output tokens generated per second among top LLMs, which the keynote framed as "Fastest Intelligence."
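
To give a rough sense of what these Elo gaps mean in practice, the sketch below applies the classic chess Elo expected-score formula. This is an assumption made for illustration: the keynote did not describe how LMArena or WebDev Arena compute their ratings, and the function name `expected_win_rate` is hypothetical.

```python
def expected_win_rate(rating_gap: float) -> float:
    """Classic Elo expected score for the higher-rated side, given the rating gap."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


# Gaps quoted in the keynote: 142 points (WebDev Arena) and 300 points (since first generation).
for gap in (142, 300):
    print(f"{gap}-point gap -> {expected_win_rate(gap):.1%} expected win rate")
```

Under this textbook formula, a 142-point lead corresponds to winning roughly 69% of head-to-head comparisons, and a 300-point lead to roughly 85%.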

Gemini 2.5: Pro and Flash

Google DeepMind's advancements highlighted two core Gemini models:

- Gemini 2.5 Pro: Positioned as Google's most intelligent model ever, it continues to top LLM leaderboards for coding and learning (WebDev Arena, LMArena). It introduces Deep Think, an experimental mode that pushes model performance to its limits, achieving groundbreaking results in complex problem-solving domains like mathematics (USAMO 2025), competitive coding (LiveCodeBench), and multimodality (MMMU).

- Gemini 2.5 Flash: An efficient, fast, and cost-effective "workhorse" model, securing the second spot on the LMArena leaderboard. It boasts 22% efficiency gains on evaluations by reducing the number of tokens required for the same performance.

Both Gemini 2.5 Flash and Pro are slated for general availability soon, with previews already accessible in Google AI Studio, Vertex AI, and the Gemini app for developers.

Comparative Overview: Gemini 2.5 Pro vs. Flash

| Feature | Gemini 2.5 Pro | Gemini 2.5 Flash |
|---|---|---|
| Intelligence | Google's most intelligent model, top-tier performance | Highly intelligent, second-ranked on LMArena |
| Primary Focus | Complex reasoning, competitive coding, advanced problem-solving (Deep Think) | Efficiency, speed, cost-effectiveness for high-volume tasks |
| Benchmarks | #1 LMArena, #1 WebDev Arena, 300+ Elo growth | #2 LMArena, 22% efficiency gains |
| Capabilities | Advanced multimodal reasoning, detailed analysis, creative generation | Fast and reliable response generation, suitable for core LLM applications |
| Cost/Latency | Optimized for quality and complexity | Optimized for lower cost and faster inference |
| Availability | General Availability soon (currently preview) | General Availability in early June (currently preview) |

Powering the Future: Advanced AI Infrastructure with Ironwood TPU

Underpinning these remarkable AI advancements is Google's commitment to cutting-edge hardware infrastructure. The keynote introduced "Ironwood," Google's 7th generation Tensor Processing Unit (TPU).

A Tensor Processing Unit (TPU) is an application-specific integrated circuit (ASIC) developed by Google specifically for accelerating machine learning workloads, particularly those involving neural networks. TPUs are designed to handle the large matrix multiplications and convolutions common in AI models much more efficiently than general-purpose CPUs or even GPUs for certain tasks.

Ironwood is engineered for AI inference at scale, delivering 10x the performance of the previous generation and 42.5 exaFLOPS of compute per pod. FLOPS stands for floating-point operations per second, and the prefix "exa" denotes 10^18, so one exaFLOPS is one quintillion floating-point operations per second. This processing power marks a large leap in Google's ability to run complex AI models quickly and efficiently. Ironwood TPUs will be available to Google Cloud customers later this year, broadening access to Google's AI infrastructure.
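
The scale of that figure is easier to grasp with a quick unit conversion. The sketch below is only arithmetic on the quoted per-pod number. The workload size is a made-up example, and the calculation assumes perfect utilization, which real systems do not reach.

```python
EXA = 10 ** 18  # SI prefix "exa"

# Per-pod figure quoted in the keynote, in floating-point operations per second.
pod_flops_per_second = 42.5 * EXA


def seconds_for(total_operations: float, flops_per_second: float = pod_flops_per_second) -> float:
    """Idealized time to finish a workload, assuming every operation runs at peak rate."""
    return total_operations / flops_per_second


# Hypothetical workload of 10**21 floating-point operations.
print(f"{seconds_for(1e21):.1f} s")
```

At peak rate, a pod would finish this hypothetical workload in about 23.5 seconds.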

A World Transformed: AI's Massive Adoption and Usage Growth

The impact of Google's AI advancements is clearly reflected in its widespread adoption and exponential usage growth across its products and developer ecosystems.

Monthly AI tokens processed across Google's products and APIs have skyrocketed by approximately 50x in just one year, from 9.7 trillion to over 480 trillion. This metric illustrates the sheer volume of AI computations powering everyday user interactions.
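
As a quick check on the "approximately 50x" figure, the two monthly token counts quoted above can be divided directly:

```python
tokens_last_year = 9.7e12  # 9.7 trillion tokens per month
tokens_now = 480e12        # 480 trillion tokens per month

growth_factor = tokens_now / tokens_last_year
print(f"{growth_factor:.1f}x")
```

The ratio comes out just under 50, which matches the rounded figure from the keynote.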

Developer engagement has also seen explosive growth, with over 7 million developers now building with the Gemini API across AI Studio and Vertex AI, a 5x increase since the last I/O. Furthermore, Gemini usage on Vertex AI has increased over 40x, demonstrating strong developer confidence and deployment of Google's AI models.

The Gemini app itself boasts over 400 million monthly active users, particularly with the 2.5 models, signifying strong user engagement with Google's direct AI offerings. Perhaps most impressively, AI Overviews in Search now reach over 1.5 billion users every month, solidifying Google Search's position as the product bringing generative AI to more people than any other in the world.

Infographical Image: AI Adoption & Usage Growth

Redefining Interaction: Groundbreaking Research Becomes Reality

Google I/O '25 transformed ambitious research projects into accessible, real-world applications, profoundly changing how we communicate, work, and interact with information.

Immersive Communication & Real-Time Translation

- Google Beam (from Project Starline): In partnership with HP, Google Beam is an AI-first video communications platform that transforms 2D video streams into a realistic 3D, deeply immersive conversational experience. Utilizing multiple cameras and AI-powered light field displays, this technology creates a sense of presence where participants appear to be in the same room. A light field display captures and projects light from multiple angles, allowing a viewer to perceive a truly three-dimensional image without special glasses, creating a more natural and immersive visual experience. Devices will be available to early customers later this year.

- Real-time Speech Translation in Google Meet: Directly integrated into Google Meet, this feature offers seamless, natural-sounding translation that matches the speaker's tone and style. English and Spanish are now available to subscribers, with more languages and enterprise availability coming soon.

The Universal AI Assistant: Gemini Live and Agentic Capabilities

- Gemini Live (from Project Astra): Rolling out today on Android and iOS, Gemini Live leverages Project Astra's camera and screen-sharing capabilities to allow users to interact with Gemini in real-time about anything they see. It acts as a universal AI assistant, offering improved voice output, enhanced memory, and added computer control.

- Real-world examples: Adding an event to a calendar by pointing the camera at an invitation or transcribing a handwritten shopping list into Google Keep. Gemini Live supports 45+ languages in 150+ countries, with conversations proving 5x longer than text-based interactions.

- Project Mariner (Agent Capabilities): Project Mariner is an agent that can interact with the web to perform complex tasks. An agentic AI is a system that can understand high-level goals, break them down into sub-tasks, plan sequences of actions, execute those actions, and adapt to changing environments, often with minimal human intervention. Project Mariner now supports multitasking, overseeing up to 10 simultaneous tasks, and features "Teach and Repeat" functionality, which lets it learn tasks by demonstration. These capabilities are coming to the Gemini API for developers this summer.

- Agent Mode in the Gemini App: An experimental version of this mode will allow Gemini to handle multi-step tasks, such as finding apartments based on detailed criteria, browsing listings, and even scheduling tours on the user's behalf. This advanced functionality is coming soon to subscribers.

- Personalized Smart Replies in Gmail: AI-powered smart replies leverage personal context from across your Google apps (with permission) to generate responses that match your tone and style and draw on relevant personal information (e.g., past travel itineraries). This feature will be available in Gmail this summer for subscribers.
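
The plan, execute, and adapt cycle that defines agentic systems like those above can be sketched as a small loop. Everything here is hypothetical and chosen for illustration: the `plan` and `run_agent` functions, the hard-coded sub-tasks, and the retry-based "adaptation" are stand-ins, not how Project Mariner or Agent Mode is implemented.

```python
from typing import Callable


def plan(goal: str) -> list[str]:
    """Hypothetical planner: a real agent would ask a model to decompose the goal."""
    return [
        f"search listings for {goal}",
        f"filter results for {goal}",
        f"schedule tour for {goal}",
    ]


def run_agent(goal: str, act: Callable[[str], bool], max_attempts: int = 2) -> list[tuple[str, str]]:
    """Plan sub-tasks, execute each one, and retry a failed step before escalating it."""
    log = []
    for step in plan(goal):
        for attempt in range(1, max_attempts + 1):
            if act(step):
                log.append((step, f"done on attempt {attempt}"))
                break
        else:
            # No attempt succeeded, so the step is handed back to the user.
            log.append((step, "needs human help"))
    return log


# Toy tool that fails the first time it is asked to schedule something.
already_failed = set()


def flaky_tool(step: str) -> bool:
    if step.startswith("schedule") and step not in already_failed:
        already_failed.add(step)
        return False
    return True


for step, outcome in run_agent("2-bedroom apartment", flaky_tool):
    print(step, "->", outcome)
```

Retrying is the simplest possible form of adaptation. A production agent would more likely re-plan based on what went wrong.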

Developer-Focused Enhancements

- Native Audio Output: Gemini 2.5 introduces first-of-its-kind multi-speaker support (two distinct voices) for text-to-speech. This allows for expressive, nuanced conversations, including whispers and seamless language switching across 24+ languages, available in the Gemini API today.

- Enhanced Security & Transparency: Gemini 2.5 is Google's most secure model to date, featuring strengthened protections against threats like indirect prompt injections. A prompt injection attack is a vulnerability in AI systems where malicious inputs are crafted to override or manipulate the AI's intended instructions, making it perform unintended actions or reveal sensitive information.

- Thoughts (Experimental): This new experimental feature provides structured summaries of the model's internal reasoning (headers, key details, tool calls), offering increased transparency and aiding in debugging for developers.

- Thinking Budgets: Coming soon to Gemini 2.5 Pro, this feature provides developers with control over the number of tokens the model uses for "thinking," allowing optimization for cost, latency, or quality.
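
To make the thinking-budget idea concrete, the sketch below builds two request bodies that differ only in how many tokens the model may spend on reasoning. The field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) are assumed to mirror the public Gemini REST API and should be checked against the official reference. The helper `build_request` and the budget values are hypothetical, and no network call is made.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a generateContent-style request body with a cap on thinking tokens.

    The JSON shape is an assumption based on the public Gemini REST API.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingBudget": thinking_budget}},
    }


# Smaller budget: favors cost and latency. Larger budget: favors answer quality.
quick = build_request("Summarize this changelog.", thinking_budget=512)
thorough = build_request("Prove the lemma step by step.", thinking_budget=8192)

print(json.dumps(thorough, indent=2))
```

The trade-off is the one described above: the same prompt can be sent with a small budget when cost or latency matters most, or with a large one when quality does.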

Creative & Practical Developer Applications

- Code Generation from Images/Sketches: Gemini 2.5 Pro can turn abstract sketches into functional 3D code, such as an interactive 3D photo gallery or complex 3D city simulations.

- Multimodal Reasoning: Multimodal AI refers to artificial intelligence systems that can process and understand information from multiple types of data, such as text, images, audio, and video, integrating them to gain a more comprehensive understanding and generate richer responses. Gemini demonstrates vast multimodal reasoning powers, from building games/apps in a single shot to unpacking scientific papers and understanding YouTube videos.

- Jules (AI Coding Agent): Now in public beta, Jules is an asynchronous coding agent that can plan, modify files, fix bugs, and update large codebases (e.g., Node.js upgrades) autonomously in minutes, integrating with GitHub.

- Gemini Diffusion: A new experimental text-to-text diffusion model for text editing (math, code) that iterates quickly, error-corrects during generation, and is 5x faster than previous fastest models. Diffusion models are a class of generative AI models that learn to create data (like images or text) by iteratively refining random noise until it resembles data from the training set, effectively "denoising" the input into a coherent output.
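
The "iteratively refining random noise" idea can be shown with a toy one-dimensional example. This is a minimal sketch under strong assumptions, not Gemini Diffusion's method: the "training data" is a single Gaussian, so the ideal noise estimate has a closed form and no neural network is needed. The sampler follows the standard DDPM ancestral update, and all names and constants are chosen for illustration.

```python
import math
import random

MU, SIGMA = 3.0, 0.5  # toy "training data" distribution (an assumption for this demo)


def make_schedule(num_steps=200, beta_start=1e-4, beta_end=0.1):
    """Linear noise schedule; alpha_bars[t] is the fraction of signal left at step t."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def ideal_noise_estimate(x_t, alpha_bar):
    """Closed-form E[noise | x_t] for Gaussian data; a trained network would stand here."""
    marginal_var = alpha_bar * SIGMA ** 2 + (1.0 - alpha_bar)
    return math.sqrt(1.0 - alpha_bar) * (x_t - math.sqrt(alpha_bar) * MU) / marginal_var


def sample(rng, betas, alpha_bars):
    """Start from pure noise and denoise step by step (DDPM ancestral sampling)."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(len(betas))):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps_hat = ideal_noise_estimate(x, alpha_bar)
        x = (x - beta / math.sqrt(1.0 - alpha_bar) * eps_hat) / math.sqrt(1.0 - beta)
        if t > 0:
            # Re-inject a little noise on every step except the last.
            x += math.sqrt(beta) * rng.gauss(0.0, 1.0)
    return x


rng = random.Random(0)
betas, alpha_bars = make_schedule()
samples = [sample(rng, betas, alpha_bars) for _ in range(2000)]
mean_estimate = sum(samples) / len(samples)
print(f"mean of generated samples: {mean_estimate:.2f} (data mean: {MU})")
```

Each sample begins as noise centered on zero and ends near the data distribution's mean of 3.0. A text diffusion model applies the same refine-in-steps principle to token sequences rather than to a single number.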

The Evolution of Search: From Information to Intelligence

Google Search, a cornerstone of Google's mission, is undergoing a "total reimagining" powered by AI, moving beyond simply organizing information to delivering intelligent, agentic, and personalized results.

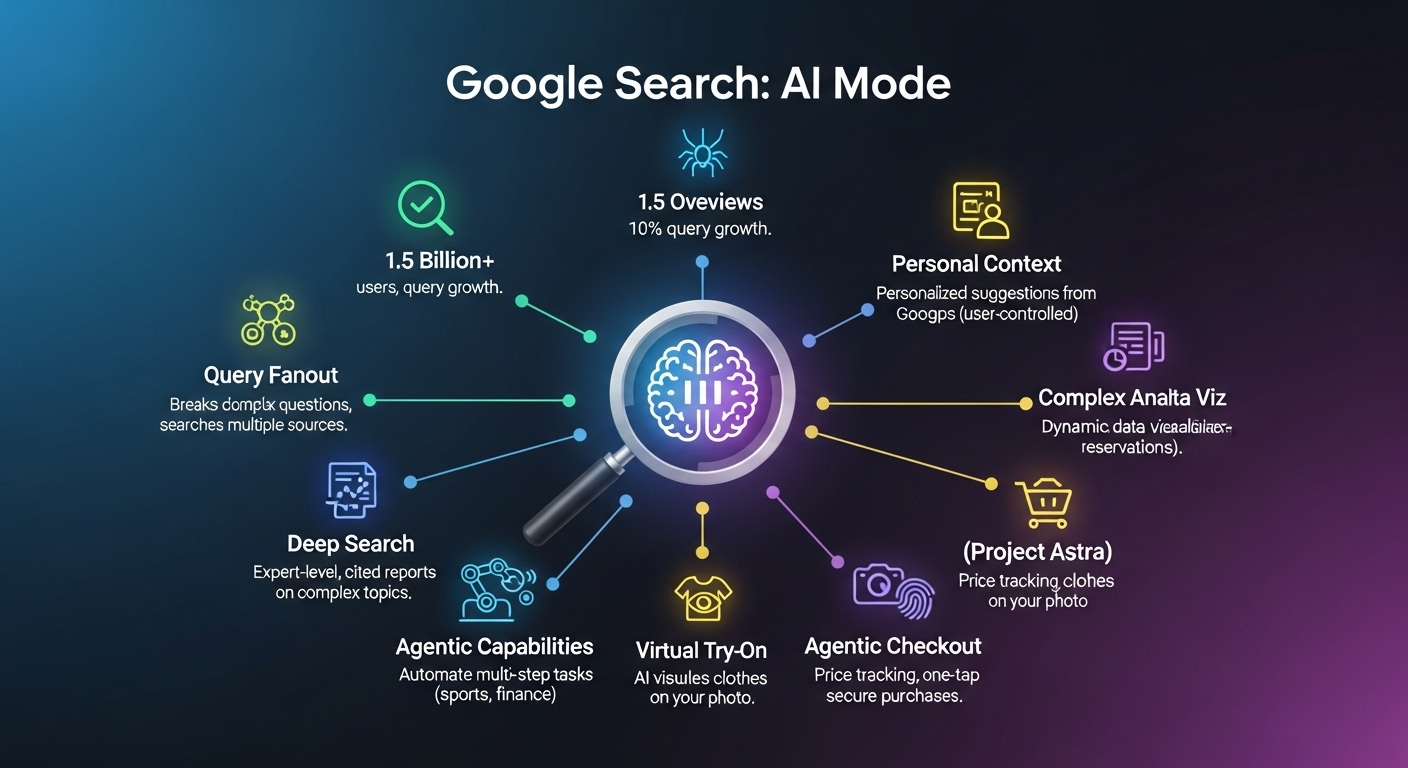

AI Mode: The Future of Search

Building on the success of AI Overviews, which are now used by over 1.5 billion people monthly and have driven over 10% growth in user queries, Google is rolling out AI Mode to everyone in the U.S. This represents an end-to-end AI search experience, powered by Gemini 2.5.

AI Mode utilizes a custom Gemini version and a sophisticated technique called "Query Fanout." This technique breaks down complex questions into sub-topics and simultaneously searches across various data sources (web, Knowledge Graph, local data, Shopping Graph, Maps community, sports, finance) to provide comprehensive, verified responses.
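
The fan-out pattern described above can be sketched with a thread pool: split the question, query every source for every sub-topic concurrently, then regroup the results. This is a minimal illustration only. The `SOURCES` backends are fake, the string-splitting decomposition is a placeholder for what would be a model-driven step, and none of it reflects Google's actual implementation.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for real backends (web index, Knowledge Graph, local data).
SOURCES = {
    "web": lambda q: f"web result for '{q}'",
    "knowledge_graph": lambda q: f"entity facts for '{q}'",
    "local": lambda q: f"nearby places for '{q}'",
}


def split_into_subtopics(question: str) -> list[str]:
    """Placeholder decomposition; a production system would use a model for this step."""
    return [part.strip() for part in question.split(" and ") if part.strip()]


def fan_out(question: str) -> dict[str, list[str]]:
    """Run every (sub-topic, source) lookup concurrently and group results by sub-topic."""
    subtopics = split_into_subtopics(question)
    jobs = [(topic, fetch) for topic in subtopics for fetch in SOURCES.values()]
    with ThreadPoolExecutor(max_workers=8) as pool:
        # pool.map preserves input order, so results line up with jobs.
        results = list(pool.map(lambda job: job[1](job[0]), jobs))
    grouped = {topic: [] for topic in subtopics}
    for (topic, _fetch), result in zip(jobs, results):
        grouped[topic].append(result)
    return grouped


answer = fan_out("best hiking trails near Denver and weather this weekend")
for topic, hits in answer.items():
    print(topic, "->", hits)
```

Issuing the lookups in parallel keeps total latency close to that of the slowest single source, rather than the sum of all of them.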

Coming this summer, Personal Context will enhance AI Mode by offering personalized suggestions based on past searches and connected Google apps (starting with Gmail), with user-controlled opt-in/out. Deep Search will extend the Query Fanout technique, performing dozens or hundreds of searches to generate expert-level, fully cited reports on complex topics in minutes. Additionally, for sports and financial queries, Complex Analysis & Data Visualization will provide dynamic data visualization (e.g., tables, graphs) and intricate analysis.

Project Mariner's agentic capabilities are also coming to AI Mode this summer, allowing Search to automate multi-step tasks like finding and purchasing event tickets, making restaurant reservations, or booking local services, all under user guidance. Furthermore, Project Astra's Search Live will offer real-time, live conversational and visual search capabilities, enabling users to interact with Search via their camera for immediate, context-aware assistance on tasks like DIY projects.

New Ways to Shop with AI

Google is introducing a new level of intelligence for shopping, combining visual inspiration from Google Images with the extensive Shopping Graph (over 50 billion product listings).

- Virtual Try-On: Rolling out in Labs in the U.S., users can virtually try on clothes using their own photos. AI visualizes how fabrics drape and fit on their unique body shape, utilizing advanced 3D shape understanding and diffusion models.

- Agentic Checkout: In the coming months, this feature will allow Search to track prices and make purchases securely on the user's behalf with a single tap, using integrated payment options like Google Pay.

Generative Media: Expanding the Horizons of Creativity

Google's commitment to generative media is empowering creators, musicians, and filmmakers by providing advanced tools that seamlessly integrate into the artistic process.

Music AI: Lyria and the Music AI Sandbox

Google's Music AI Sandbox is a tool designed for professionals to explore the possibilities of Lyria 2, its generative music model. Lyria 2 can create high-fidelity music and professional-grade audio, including melodic vocals, solos, and choirs. Lyria 2 is available today for enterprises, YouTube creators, and musicians, offering new avenues for musical expression and production.

SynthID: Ensuring Transparency in AI-Generated Content

Google pioneered SynthID, a technology that embeds invisible watermarks into generated media so that the content remains detectable even after modification. Over 10 billion pieces of content have been watermarked to date. Google is partnering with GetReal Security and NVIDIA to expand both the volume of watermarked content and detection capabilities. A new SynthID Detector can identify these watermarks in images, audio, text, or video, even if only a portion of the content is present. The SynthID Detector is rolling out to early testers today.

Veo and Flow: AI for Filmmaking

Google is collaborating with visionary directors like Darren Aronofsky (Founder, Primordial Soup) to shape Veo's capabilities for storytellers, ensuring that artists remain in the driver's seat of innovation. Veo assists in combining live-action performance with AI-generated video, enabling the creation of microscopic worlds, cosmic events, or historical narratives. It offers reference-powered video, maintaining character, scene, and style consistency using user-provided inputs. Precise camera controls allow filmmakers to direct Veo along specific paths (zoom, rotate, move), fluidly expressing their ideas. Veo 3, the latest iteration, significantly advances photorealism and physical understanding, and crucially, can generate sound effects, background sounds, and even dialogue directly within the video, allowing characters to speak. Veo 3 is available today.

Flow is a new AI filmmaking tool that combines the best of Veo, Imagen, and Gemini, built by and for creators. It provides an intuitive interface for visual storytelling. Users can upload or generate images (using Imagen) as "ingredients," create custom elements by describing them, and assemble clips with text prompts, including precise camera controls. Flow maintains character and scene consistency, and allows users to trim or extend clips to achieve desired effects. Flow is launching today.

Google AI Subscription Plans: Accessing Cutting-Edge AI

Google is making its advanced AI capabilities accessible through a tiered subscription model, catering to different user needs from general enthusiasts to leading-edge developers.

| Plan | Monthly Price | Key Features | Availability |
|---|---|---|---|
| Google AI Pro | $19.99 (1-month free trial) | Full suite of AI products, higher rate limits, special features. Includes: Gemini app (formerly Gemini Advanced), Flow with Veo 2, Whisk with Veo 2, NotebookLM with higher limits, Gemini in Gmail, Docs, Vids & more, Gemini in Chrome, 2 TB Storage. | Globally |
| Google AI Ultra | $249.99 (50% off for first 3 months) | For trailblazers and pioneers who want cutting-edge AI. Highest rate limits, earliest access to new features and products across Google. Includes: Gemini app with 2.5 Pro Deep Think & Veo 3, Flow with Veo 3 (available today), Whisk with highest limits, NotebookLM with highest limits (coming soon), Gemini in Gmail, Docs, Vids & more, Gemini in Chrome, Project Mariner, YouTube Premium, 10 TB Storage. | US today, rolling out to other countries soon |

The Ultra plan is described as a "VIP pass" for Google AI, offering the most advanced features and earliest access to upcoming innovations.

Android XR: The Next Frontier of Spatial Computing

Android, already the platform where "the future is seen first," is extending its reach into the realm of Extended Reality (XR), a new platform built in the "Gemini era" to power emerging form factors.

Extended Reality (XR) is an umbrella term that covers all real-and-virtual combined environments and human-machine interactions generated by computer technology and wearables, including augmented reality (AR), virtual reality (VR), and mixed reality (MR).

Expanding Android's AI-First Ecosystem

Recent updates to Android 16 and Wear OS 6 were highlighted, alongside the promise that many Gemini AI breakthroughs, including Project Astra's visual AI capabilities, are coming soon to Android. Gemini is already accessible from the power button on Android devices, offering contextual assistance across watches, car dashboards, TVs, and more.

Android XR: A Spectrum of Devices

Android XR supports a broad range of devices, each catering to different needs throughout the day, from immersive experiences (movies, gaming, work) to lightweight, on-the-go assistance:

- Video See-through (VSTR) Headsets: Offer a camera-mediated view of the real world with overlaid digital content.

- Optical See-through (OSTR) Headsets: Provide a direct view of the real world with digital overlays projected onto the lenses.

- AR Glasses: Lightweight glasses designed to overlay digital information onto the real world.

- AI Glasses: Minimalist glasses focused on AI-powered assistance and discreet interactions.

Key Collaborations and Developer Support

Android XR is developed in collaboration with Samsung and optimized for Snapdragon with Qualcomm, ensuring powerful and efficient performance. Hundreds of developers are already building for the Android XR platform, and Google is reimagining its core apps (YouTube, Maps, Chrome, Gmail, Photos) for XR. Importantly, existing mobile and tablet Android apps will also work on XR devices.

Live Demonstrations of Android XR in Action

- Samsung's Project Moohan (Headset): Introduced as the "first Android XR device," it offers an "infinite screen" to explore apps with Gemini. Demos included "teleporting" anywhere in the world with Google Maps and asking Gemini questions about the location, pulling up immersive videos and websites. The MLB App provided a front-row, immersive baseball viewing experience with real-time player and game stats via Gemini interaction. Project Moohan will be available later this year.

- Google's Android XR Glasses: Building on Google's decade-plus history in building glasses, these lightweight devices are designed for all-day wear and packed with technology (front-facing camera, microphones, open-ear speakers, optional discreet in-lens display). Functionality includes:

  - Receiving texts hands-free.

  - Muting notifications with voice commands.

  - Visual AI identification (e.g., identifying a famous basketball player, recognizing a band from a photo wall and finding related performances).

  - Playing music on demand.

  - Hands-free navigation with heads-up directions and a 3D map.

  - Scheduling events directly from context.

  - Taking photos with voice commands.

  - Real-time Live Translation: A live demo translated Farsi to English and Hindi to English in real time, with the translation appearing directly in the glasses, offering a glimpse into seamless global communication.

The Future of Wearables

Google emphasizes the need for stylish glasses that users will want to wear all day, announcing Gentle Monster and Warby Parker as the first eyewear partners. Developers will be able to start building for Android XR glasses later this year.

The Next Chapter of AI: Vision for a Transformative Future

Google I/O '25 culminated in a powerful vision for the "Next Chapter of AI," underscoring Google's long-standing legacy in foundational AI and its commitment to tackling humanity's most pressing challenges.

Deep Think, World Models, and Robotics

The keynote provided glimpses into the future, including:

- Deep Think (2.5 Pro): A new mode pushing model performance to its limits, achieving groundbreaking results in complex problem-solving.

- World Models: Google's next chapter for Gemini involves building AI that can understand and simulate the world, make plans, and imagine new experiences (e.g., Genie 2 generating playable 3D environments from image prompts).

- Gemini Robotics: A specialized model that enables robots to understand the physical environment and perform useful tasks (grasping, following instructions, adapting to novel tasks on the fly).

- Project Astra: The ultimate vision for the Gemini app – a universal AI assistant that is personal, proactive, and powerful, with capabilities like improved voice output, enhanced memory, and computer control. Many Project Astra innovations will gradually integrate into Gemini Live and other products, including Android XR glasses.

Foundational AI Legacy

Google's AI journey spans decades, from pioneering the Transformer architecture (which underpins modern large language models) to groundbreaking achievements like AlphaGo, AlphaFold (for protein folding), and PaLM. The keynote framed this sustained research effort as progress toward Artificial General Intelligence (AGI).

AI for Global Impact

The keynote highlighted AI's role in addressing real-world problems:

- FireSat Initiative: Demonstrated the use of AI and multi-spectral satellite imagery for early wildfire detection, capable of spotting fires as small as 270 sq ft and reducing response time from 12 hours to 20 minutes, showcasing AI's potential in disaster management.

- Wing Drone Delivery: Showcased how AI-powered drone deliveries provided critical relief efforts (food, medicine) during Hurricane Helene, in partnership with Walmart and the Red Cross, emphasizing AI's humanitarian applications.

Sundar Pichai emphasized that these significant advancements are expected within "years, not decades," sharing a personal anecdote of his parents being amazed by a Waymo ride, illustrating the profound and inspiring impact of technology. Waymo is an autonomous driving technology development company, a subsidiary of Alphabet Inc., that is developing self-driving cars.

Conclusion: AI from Research to Reality

Google I/O '25 was a comprehensive declaration of the "Gemini Era," a period defined by Google's commitment to transforming cutting-edge AI research into practical, intelligent, and deeply personal experiences. From the stunning AI-generated opening video to the unveiling of the Ironwood TPU, the sweeping advancements in Gemini models, the re-imagined Google Search with AI Mode, the expansive Android XR platform, and the visionary applications in generative media and global impact initiatives, every announcement underscored a future where AI is seamlessly integrated into every facet of our lives.

The recurring themes of personalization, proactivity, and unprecedented power demonstrate Google's intent to deliver an AI that doesn't just respond but truly understands, anticipates, and empowers users and developers. As Google continues to push the boundaries of AI, the promise of a more intelligent, helpful, and transformative future appears closer than ever.
