Federated Learning at Scale

The landscape of artificial intelligence is rapidly evolving, driven by an insatiable demand for smarter, more personalized experiences. However, this evolution faces a significant hurdle: the ever-increasing need for data privacy and security. Traditional machine learning (ML) models often rely on centralizing vast amounts of user data, a practice that is becoming increasingly untenable due to regulatory restrictions and growing public concern over data protection. This challenge has paved the way for Federated Learning (FL), a paradigm that allows AI models to be trained on decentralized data, right where it originates, without ever compromising user privacy.

This post delves into the intricacies of federated learning at scale, exploring its core principles, unique challenges, and the innovative solutions driving its advancement.

What is Federated Learning (FL)?

Federated Learning is a collaborative, privacy-preserving distributed training approach for machine learning models. Unlike conventional methods where data from various sources is collected into a central repository for training, FL operates differently:

- Data never leaves the device where it is generated. This is the cornerstone of FL's privacy guarantee.

- Instead of raw data, devices communicate model updates (e.g., gradients or parameter changes) to a central server.

- The central server aggregates these updates to refine a global model, which is then sent back to the devices for further local training. This iterative process inherently promotes privacy by design.

Why Federated Learning? The Drive Towards Privacy and Edge Intelligence

The motivation for federated learning stems from two critical trends:

- Shift from Cloud ML to Edge ML: Data generation is increasingly moving to "the edge" – smartphones, smartwatches, home assistants, and AR/VR devices. Training models closer to where the data is created reduces latency, saves bandwidth, and opens doors for real-time applications.

- Privacy and Security Concerns: With stringent regulations like GDPR and HIPAA, and a growing societal demand for data protection, centralizing sensitive user data is becoming less feasible. FL enables organizations across sectors like tech services, healthcare, and finance to train powerful models without the privacy risks associated with data centralization. For example, Google's Gboard uses FL to improve predictive text and suggestions on smartphones without uploading user keystrokes, and Apple applies a similar approach for Siri.

FL vs. Traditional Distributed Optimization: A Fundamental Difference

While both federated learning and traditional distributed optimization involve multiple machines collaborating, their fundamental assumptions and challenges diverge significantly:

| Feature | Traditional Distributed Optimization | Federated Learning |

|---|---|---|

| Data Access | Centralized access to all data. | Decentralized: Data remains on local devices. |

| Data Handling | Can randomly permute and distribute data (IID subsets). | Cannot access or randomly permute raw data. Inherently non-IID. |

| Communication | Transparent communication of model updates (gradients, parameters). | Privacy-preserving communication required; raw updates can be risky. |

| Primary Challenges | Communication overhead, variable delays. | Privacy, data heterogeneity, system heterogeneity, communication efficiency. |

| Data Privacy | Minimal inherent privacy; relies on secure infrastructure. | Core principle: Designed for privacy from the ground up. |

The primary difference lies in privacy: FL's design is dictated by the imperative to keep raw data on local devices, introducing unique challenges that traditional distributed systems do not face.

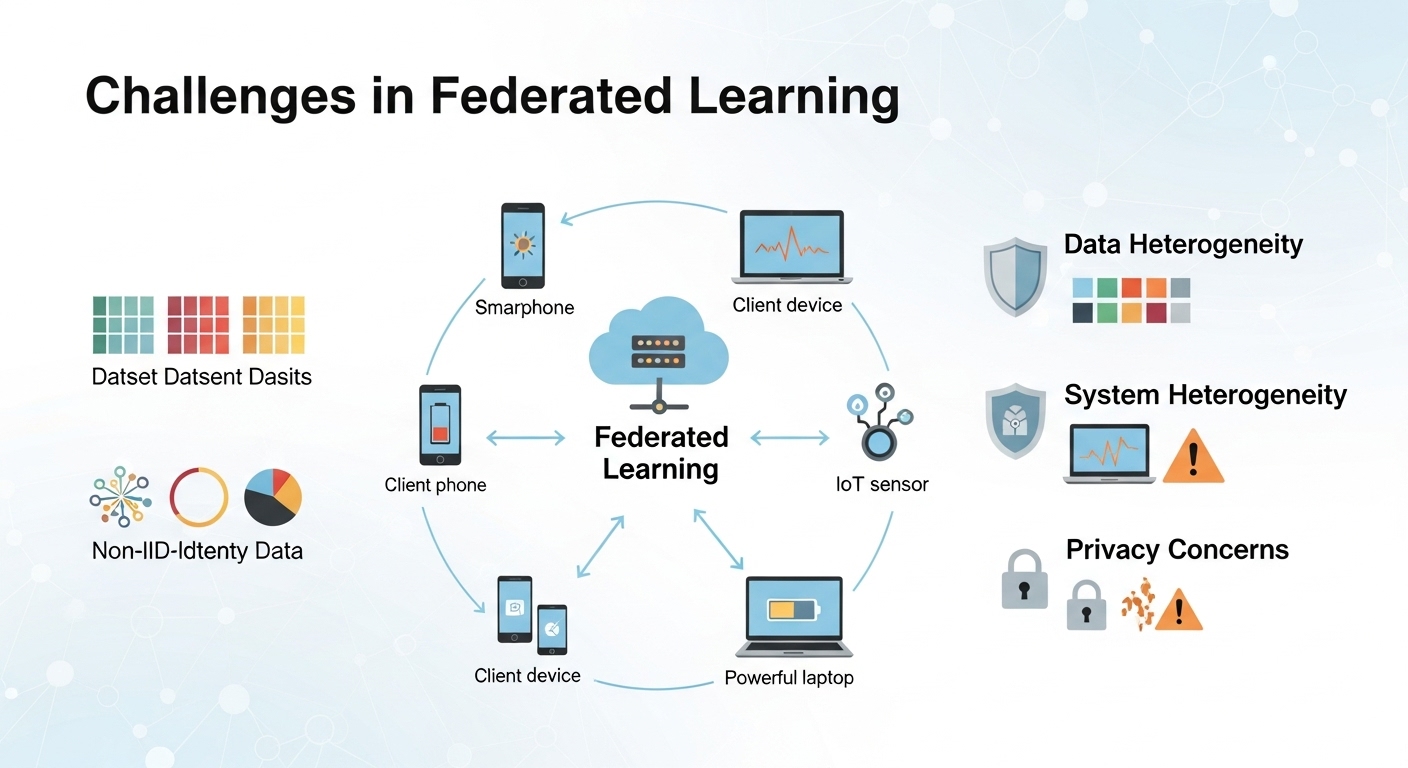

Key Challenges in Federated Learning: Navigating the Privacy Imperative

Even when data remains on devices, sharing model updates can still pose privacy risks. For instance, Gradient Inversion Attacks demonstrate that model updates, even gradients, can inadvertently leak reconstructable information about a client's private training data. This could mean recreating an image from its gradient or inferring sensitive information.

To counter such sophisticated attacks, FL employs critical privacy-enhancing technologies:

- Secure Aggregation (SA): This cryptographic technique combines model updates from multiple clients in a way that individual contributions are protected, preventing the server (or any single party) from seeing any client's unmasked update. The server only sees the aggregate result.

- Differential Privacy (DP): DP adds carefully calibrated noise to model updates (or to the data itself) to provide strong, mathematically provable privacy guarantees. This makes it extremely difficult to infer individual data points, even when observing the aggregated model. DP can be applied either locally (on the client device) or centrally (by the server during aggregation), with a trade-off between privacy strength and model utility.

Beyond privacy, FL environments introduce several practical complexities:

- Data Imbalance: Clients often possess vastly different amounts of local data.

- Data Heterogeneity (Non-IID): The data distributions vary significantly across clients, meaning different devices might see different patterns.

- Device Availability: Clients can be intermittently online, connecting and disconnecting unpredictably.

- Device Capabilities: There's a wide spectrum of computational power and memory, from high-end servers to low-power IoT devices.

- Low-Bandwidth Communications: Updates are frequently sent over mobile networks or Wi-Fi, which can be slow and unreliable.

- System Heterogeneity: Diverse hardware and software configurations across clients add another layer of complexity.

Two Main Types of Federated Learning

Federated learning manifests in two primary scenarios, each with distinct characteristics and challenges:

| Feature | Cross-Device FL | Cross-Silo FL |

|---|---|---|

| Scale | Hundreds of millions to billions of devices | Tens to hundreds of organizations/silos |

| Data per Device | Small amount | Moderate to large amount |

| Capabilities | Low-power, limited compute/memory | High-power, server-grade resources |

| Communication | Low-bandwidth, intermittent availability | High-bandwidth, always available |

| State | Typically stateless (devices don't store long-term) | Potentially stateful (institutions may maintain persistent state) |

| Examples | Smartphone keyboards (Gboard), voice assistants (Siri, "Hey Google" detection) | Healthcare (hospitals collaborating on medical data), finance (banks detecting fraud), industrial IoT |

Synchronous Federated Learning Training Protocol (Cross-Device Focus)

The most common FL protocol, especially for cross-device scenarios, operates synchronously in rounds:

graph TD

subgraph Central Server

S[Start: Initialize Global Model] --> S1{Select Clients}

S1 --> S2{Send Global Model to Clients}

S3{Aggregate Updates from Clients} --> S4[Update Global Model]

S4 --> S5{Repeat or Deploy}

end

subgraph Multiple Clients

C1[Client 1: Receive Global Model] --> C1a[Train Locally on Private Data]

C2[Client 2: Receive Global Model] --> C2a[Train Locally on Private Data]

C_N[Client N: Receive Global Model] --> C_Na[Train Locally on Private Data]

C1a --> C1b[Send Model Difference/Update]

C2a --> C2b[Send Model Difference/Update]

C_Na --> C_Nb[Send Model Difference/Update]

end

S2 --> C1; S2 --> C2; S2 --> C_N;

C1b --> S3; C2b --> S3; C_Nb --> S3;

Process Flow:

- Clients Indicate Readiness: Devices signal their availability (e.g., connected to power, on Wi-Fi, idle, user consent given).

- Server Sends Model: The central server transmits the latest global model to a selected group of ready clients.

- Clients Local Training: Each client performs several local model updates (e.g., Stochastic Gradient Descent steps) on its private data.

- Clients Send Updates: Clients compute and send their model differences (updates) back to the server.

- Server Aggregation & Update: The server aggregates these model differences (often using Secure Aggregation) and updates the global model. This process then repeats for new rounds until convergence or a stopping criterion is met.

Local Update Methods: Balancing Communication and Convergence

A key innovation in FL, particularly for reducing communication overhead, is allowing clients to perform multiple local training steps (e.g., mini-batch SGD steps) on their data before sending an update. This approach, often termed Federated Averaging (FedAvg) or Local SGD, significantly reduces the frequency of communication rounds between clients and the central server.

However, this strategy introduces a challenge: model "drift". If local models train for too long on diverse local datasets, they can diverge significantly from the global model, potentially slowing down overall convergence or degrading performance. To mitigate this:

- FedProx: Introduced by Li et al. (2018), FedProx adds a proximal term to the local optimization objective. This term acts as a regularizer, encouraging client models to stay closer to the global model, thereby stabilizing training in heterogeneous (non-IID) data environments.

- SCAFFOLD: Karimireddy et al. (2020) proposed SCAFFOLD (Stochastic Controlled Averaging for Federated Learning), which uses "control variates" (estimates of gradient directions) on both the server and clients to correct for client drift, accelerating convergence by reducing variance between client updates.

- Other methods like MIME (momentum-based variance reduction), FedNova and FedLin (correcting for varying local update counts), and FedShuffle (addressing finite dataset shuffling) continue to refine local update strategies.

Secure Aggregation Explained: Protecting Individual Contributions

Secure Aggregation is a

cryptographic cornerstone for privacy in FL. It enables the central server

to compute the sum of client contributions without seeing individual ones.

This works by clients adding random, symmetric "masks" to their model

updates. These masks are generated in such a way that they sum to zero (or

a known constant) when all participating clients' updates are aggregated.

Therefore, the server obtains the sum of updates, and the individual

privacy of each client's contribution is preserved. Initial proposals for

secure aggregation involved communication complexities of

O(N^2) (where N is the number of clients), but more advanced

methods have achieved O(N log N) or even O(N) by

structuring communication as a graph.

sequenceDiagram

participant Client1 as Client 1

participant ClientN as Client N

participant SA as Secure Aggregation Service

participant Server as Central Server

Server->>Client1: Global Model (Wt)

Server->>ClientN: Global Model (Wt)

Client1->>Client1: Local Training (on private data)

ClientN->>ClientN: Local Training (on private data)

Client1->>Client1: Compute Update ΔW1 + Mask1

ClientN->>ClientN: Compute Update ΔWN + MaskN

Client1->>SA: Send (ΔW1 + Mask1)

ClientN->>SA: Send (ΔWN + MaskN)

SA->>SA: Sum all (ΔWi + Maski)

SA->>Server: Send Sum(ΔWi) (masks cancel out)

Server->>Server: Update Global Model (Wt+1)

Server-->>Client1: New Global Model (Wt+1)

Server-->>ClientN: New Global Model (Wt+1)

note right of Server: Repeat for next round

Mechanism:

- Quantization and Finite Field Arithmetic: Clients quantize their model updates and perform arithmetic over a finite field (e.g., integers modulo a prime number Q).

- Random Mask Generation: Each client generates a random "mask" (noise) and exchanges random seeds (keys) for these masks with other participating clients. These masks are designed to cancel each other out when summed.

- Masked Update Transmission: Clients send their local model update, masked by these random values, to the central server.

- Server Aggregation: The server receives and sums the masked updates. Crucially, due to the properties of the masks, they cancel each other out in the sum, revealing only the aggregate model update (e.g., (Update1+Mask1) + (Update2+Mask2) = (Update1+Update2) + (Mask1+Mask2); if Mask1+Mask2 = 0, only Update1+Update2 is revealed).

- This protocol ensures that neither individual clients (who only see their own masked update and shared seeds) nor the server (who only sees the sum of masked updates and later, the sum of seeds) can inspect any single client's unmasked model update.

Practical Challenges in FL: The "Straggler" Problem

While synchronous FL provides a clear training rhythm, it introduces significant practical hurdles at scale:

- Numerical Mismatch: ML models typically use floating-point numbers, while cryptographic protocols for secure aggregation often operate over integers or finite fields, requiring careful conversions.

- Centralized Communication: Mobile devices usually communicate only through a central server, not peer-to-peer, complicating some secure aggregation schemes that assume direct client-to-client interaction.

- Flaky Clients: Devices frequently drop out mid-protocol due to network issues, battery depletion, or user activity, necessitating robust fault-tolerance mechanisms.

- Iterative & Latency-Sensitive: FL is an iterative process, and low latency per round is crucial for timely model training.

The most prominent issue in synchronous FL is the "straggler" problem. Synchronous rounds are bottlenecked by the slowest client. Client execution times are highly heterogeneous, varying by orders of magnitude (from sub-second to hundreds of seconds). This leads to significantly long round durations.

One common, but problematic, solution is to set a timeout to filter out slow clients. While this can reduce training time (e.g., from 130 hours to 19 hours), it negatively impacts model quality, especially for specific user segments. Filtering out stragglers can introduce bias, particularly affecting data-rich clients who might also be slower, leading to degraded model performance for those groups (e.g., perplexity of top 1% users degrading from 47 to 73).

Asynchronous Federated Learning: Introducing FedBuff

To address the straggler problem and the latency bottleneck, Asynchronous Federated Learning allows clients to operate at their own pace. A key challenge with asynchronous updates is that they can become "stale" (based on older global model versions) if clients take too long to return their updates.

FedBuff is a buffered asynchronous aggregation method that combines the benefits of both synchronous and asynchronous approaches, while being compatible with privacy-preserving technologies like Secure Aggregation and differential privacy. It leverages Trusted Execution Environments (TEEs) for secure aggregation.

Trusted Execution Environments (TEEs)

Trusted Execution Environments (TEEs) are secure hardware areas within a main processor that guarantee confidentiality and integrity for code and data, even if the host operating system is compromised. The principle is "What happens in the TEE stays in the TEE." Examples include Intel SGX, ARM TrustZone, and AMD SEV.

FedBuff Secure Aggregation Flow

FedBuff's approach to secure aggregation using TEEs is designed for efficiency and privacy:

sequenceDiagram

participant C as Client Device

participant S_Aggr as Server Aggregator

participant TEE as Trusted Execution Environment

C->>C: Generate Local Update (ΔW)

C->>C: Generate Random Mask (M)

C->>C: Encrypt (ΔW + M)

C->>S_Aggr: Send Encrypted (ΔW + M)

C->>TEE: Send Key/Seed for M (secure channel)

loop Asynchronous Buffering & Aggregation

S_Aggr->>S_Aggr: Collect multiple Encrypted (ΔW + M) in buffer

alt Threshold of updates reached

S_Aggr->>TEE: Send buffered Encrypted Updates

TEE->>TEE: Use Keys/Seeds to Decrypt & Remove Masks

TEE-->>S_Aggr: Send Aggregated Unmasked Update (ΣΔW)

S_Aggr->>S_Aggr: Update Global Model

end

end

Process Flow:

- Client Generates Update & Mask: A client computes its model update and generates a random mask.

- Encrypted Update to Aggregator: The client encrypts its masked update and sends it to the Aggregator (running on the server's CPU).

- Mask Seed to TEE: Simultaneously, the client sends only the seed for its random mask over a secure channel (e.g., TLS) directly to a TEE associated with the Aggregator.

- Buffer Accumulation: The Aggregator's CPU accumulates multiple encrypted updates in a buffer.

-

TEE Aggregation: Once a sufficient number of updates (a

threshold

K) are collected, the TEE uses the collected seeds to generate the aggregate mask. It then unencrypts the aggregated updates, subtracts the aggregate mask, and applies the combined update to the global model. - Model Release: The unmasked, aggregated global model is then released from the TEE.

This design is efficient because only the compact random seed (not the large model update itself) is processed within the potentially memory-constrained TEE, reducing I/O bottlenecks.

FedBuff Performance Benefits

FedBuff demonstrates significant improvements over traditional synchronous FL, specifically addressing heterogeneity without sacrificing valuable data from slower clients:

| Metric | Basic Synchronous FL (w/o timeouts) | Synchronous FL (with timeouts) | FedBuff (Asynchronous w/ TEE) |

|---|---|---|---|

| Training Time | 130 hours | 19 hours | 18 hours |

| Average Perplexity | (Not specified for comparison) | (Degraded due to bias) | 57 |

| Top 1% Users Perplexity | (Not specified for comparison) | 73 (Degraded) | 39 (Improved) |

| Client Updates Processed | Lower | Lower (due to filtering) | Significantly Higher |

| Bias against Stragglers | Present | High | None |

FedBuff achieves faster training (e.g., 18 hours vs. 130 hours for basic FL) and importantly, yields significantly better model quality across all client segments, particularly for critical user groups, by avoiding the bias introduced by filtering out stragglers. This efficiency is achieved by allowing many more client updates to contribute within a shorter training period.

Personalization in Federated Learning: Beyond One-Size-Fits-All

As FL matures, the focus is shifting beyond training a single global model to personalizing models for individual users or devices. A "one-model-for-all" approach often falls short due to the inherent data heterogeneity across clients.

Partial Model Personalization is a promising direction, involving the decomposition of a model into:

- Shared Parameters: Common across all devices and updated through federated aggregation.

- Personal Parameters: Specific to each device, optimized locally on individual user data.

Architectural choices for partitioning shared and personal parameters include:

- Shared feature extractor + personal classifier.

- Personal feature extractor + shared classifier.

- Using "adapters" or small modules within layers that are personalized.

The optimal choice is often task-dependent. Optimization strategies generally find that alternating updates for shared and personal parameters lead to better performance than simultaneous updates. Techniques like meta-learning for personalization also aim to learn a global model that can rapidly adapt to individual client needs with minimal local fine-tuning.

Open Research Directions in Federated Learning

The field of federated learning is vibrant with ongoing research, addressing its complexities and pushing its boundaries. Key open challenges include:

- Privacy and Personalization: How to achieve robust privacy guarantees while still allowing for highly personalized models, especially for unique or outlier data.

- Statistical Learning Theory: Developing a deeper theoretical understanding of personalization in FL and its convergence properties under heterogeneity.

- Beyond Local Update Methods: Exploring novel aggregation and training strategies (e.g., ensemble distillation) that go beyond the current FedAvg variations.

- Training Large Models on Constrained Devices: Overcoming resource limitations (memory, compute) on tiny, heterogeneous edge devices for increasingly complex models.

- Label Sourcing: Addressing the challenge of obtaining labels on devices, whether through implicit user feedback, proxy data, or expert annotations.

- Advanced Privacy Frameworks: Investigating alternatives or enhancements to existing privacy frameworks like differential privacy, potentially integrating new cryptographic primitives.

- Privacy and Compression: Understanding the intricate interplay between privacy-enhancing techniques (often involving noise) and model compression (which also involves information reduction).

- Deployment Challenges: Leveraging robust distributed systems principles to handle unreliability, scaling to billions of devices, and ensuring fault tolerance in real-world FL deployments.

Conclusion

Federated learning represents a transformative approach to AI, enabling collaborative model training while rigorously upholding data privacy. By keeping sensitive information on individual devices and sharing only aggregated model updates, FL unlocks the potential of decentralized data at an unprecedented scale. From addressing the nuances of data heterogeneity and device capabilities to pioneering solutions like asynchronous aggregation with Trusted Execution Environments, the field is continuously innovating. As research progresses into personalization techniques and new privacy frameworks, federated learning is set to redefine how AI models are built, ensuring that the next generation of intelligent applications are not only powerful but also inherently private and secure.

Further Reading

- Secure Multi-Party Computation in Federated Learning

- Homomorphic Encryption Applications in AI

- The Role of Edge Computing in AI Development

- Model Compression Techniques for On-Device AI

- Fairness and Bias Mitigation in Federated Learning